Safety-Aware Anomaly Detection and RUL Analytics for Industrial Machinery

Developing an auditable, safety-aware anomaly detection and Remaining Useful Life (RUL) analytics pipeline for industrial machinery, validated on our bottle inspection and sorting demonstrator, combining multi-sensor time-series learning, uncertainty-aware alarms, and human-in-the-loop verification.

Overview

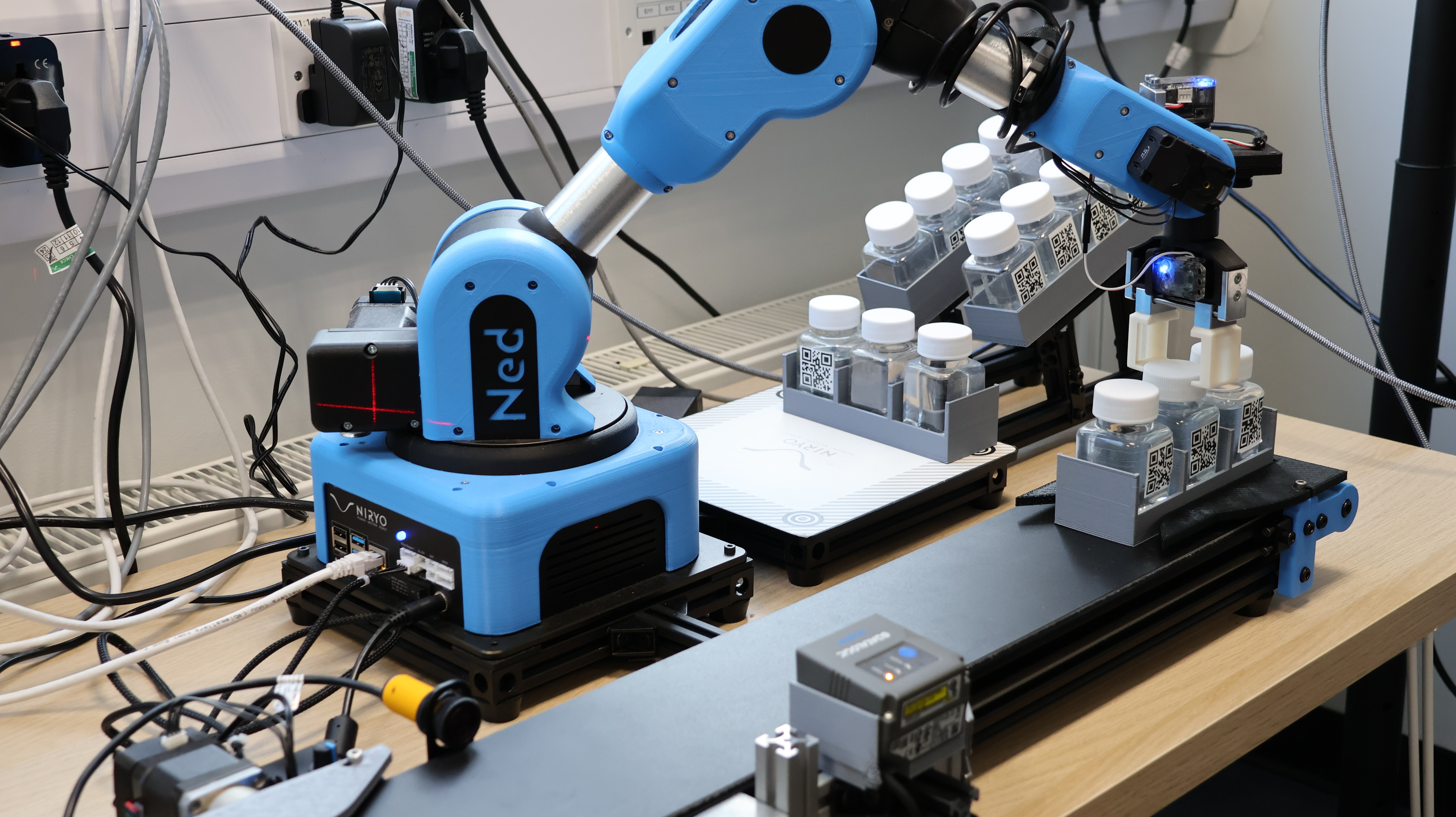

This project develops a safety-aware anomaly detection and RUL analytics pipeline for industrial machinery, grounded in demonstrator evidence rather than purely offline datasets. Using the bottle inspection and sorting system, we capture multi-sensor telemetry (signals from actuators, conveyors, inspection modules, and control logic), align it into machine-state segments, then learn normal operational signatures to detect deviation patterns early. Beyond detection, the framework estimates degradation trends and Remaining Useful Life with uncertainty bounds, enabling maintenance planning that is both operationally useful and defensible.

A core requirement is auditability, every alert is paired with traceable evidence (when the deviation started, which signals contributed, confidence/uncertainty, and operator validation outcome), enabling post hoc analysis, governance, and cross-shift handover.