Human-State Aware Human-Robot Collaborative Disassembly

Developing a human-centric HRC framework for high-value end-of-life (EoL) disassembly that adapts task allocation and robot behaviour to real-time operator state, combining cognitive and physiological modelling, negotiation-based collaboration, and a predictive digital twin to improve safety, ergonomics, and execution reliability.

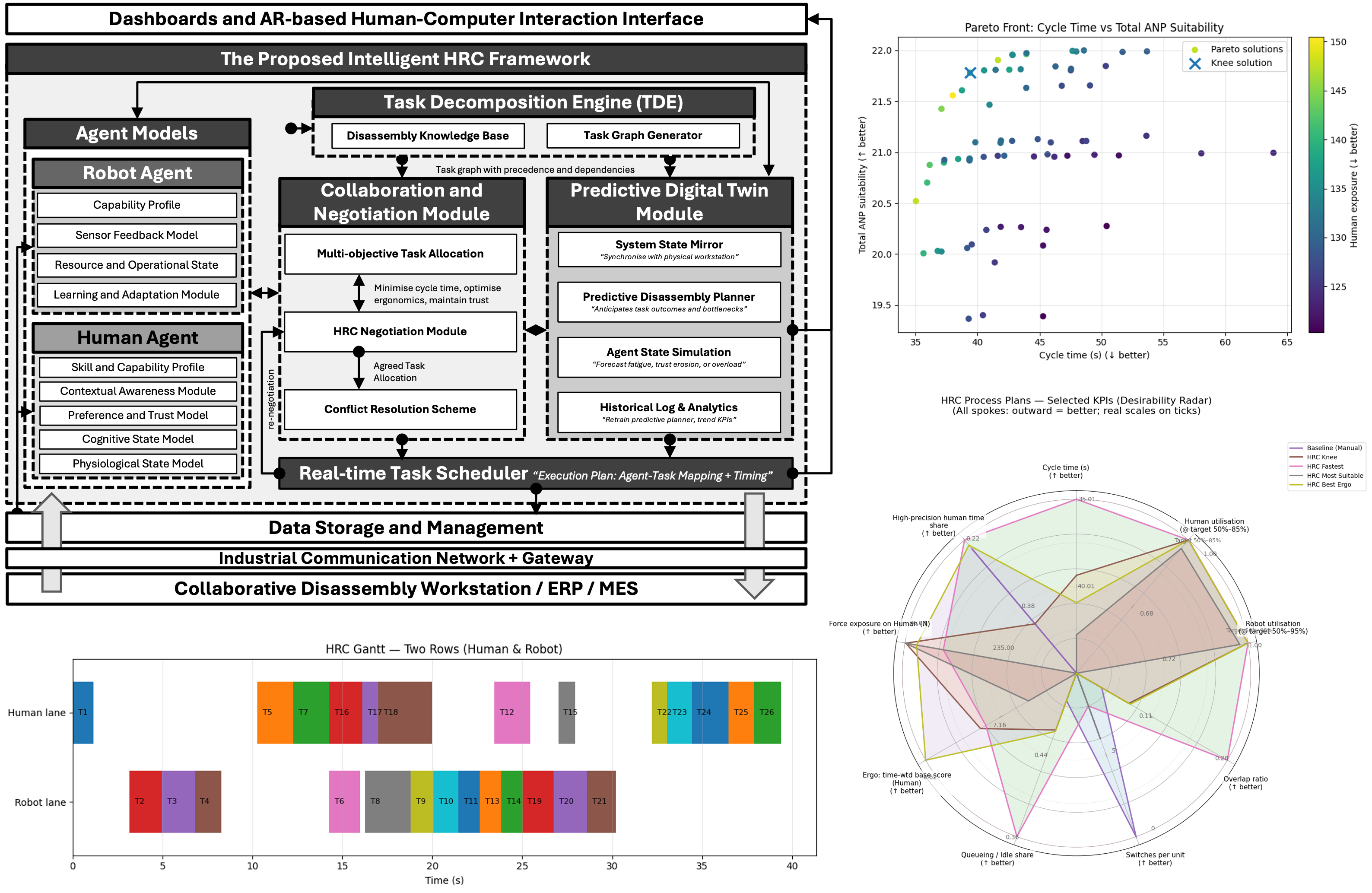

Overview

This project develops a human-state aware Human-Robot Collaboration (HRC) framework tailored for end-of-life (EoL) disassembly of high-value products, where uncertainty, variability, and safety constraints make static task allocation brittle. The central contribution is an integrated architecture in which human and robot agent models are continuously updated from operational data, including proxies for the operator's physiological and cognitive state (for example, fatigue, stress, and workload). This enables collaboration policies that respond to the operator's condition and the task context rather than assuming a fixed-capacity operator.
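One way to picture a continuously updated human agent model is the minimal Python sketch below. It is an illustration under stated assumptions, not the project's implementation: the `HumanAgentState` fields, the normalised heart-rate (`hr_norm`) and electrodermal-activity (`eda_norm`) inputs, the saturating fatigue accumulation/recovery model, and every rate constant and weight are hypothetical. Each update folds new sensor proxies into the state, and `effective_capacity` condenses that state into one number an allocation policy could consult instead of assuming a fixed-capacity operator.

```python
from dataclasses import dataclass


@dataclass
class HumanAgentState:
    """Hypothetical operator state; every field is normalised to [0, 1]."""
    fatigue: float = 0.0
    stress: float = 0.0
    workload: float = 0.0


def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


def update_state(state: HumanAgentState, hr_norm: float, eda_norm: float,
                 task_load: float, dt_s: float, working: bool,
                 k_fatigue: float = 1 / 1800, k_recover: float = 1 / 900,
                 alpha: float = 0.3) -> HumanAgentState:
    """One forward-Euler update from new sensor proxies (all constants assumed)."""
    if working:
        # Fatigue saturates: it grows more slowly as it approaches 1
        fatigue = state.fatigue + k_fatigue * task_load * (1 - state.fatigue) * dt_s
    else:
        # During rest, fatigue decays in proportion to how much has accumulated
        fatigue = state.fatigue - k_recover * state.fatigue * dt_s
    # Stress: exponential moving average of a combined physiological proxy
    proxy = 0.5 * hr_norm + 0.5 * eda_norm
    stress = (1 - alpha) * state.stress + alpha * proxy
    # Workload: taken directly as the load of the current task (0 when resting)
    return HumanAgentState(clamp(fatigue), clamp(stress), clamp(task_load))


def effective_capacity(state: HumanAgentState,
                       w_f: float = 0.5, w_s: float = 0.3, w_w: float = 0.2) -> float:
    """Scalar in [0, 1] that shrinks as fatigue, stress and workload rise."""
    return clamp(1 - (w_f * state.fatigue + w_s * state.stress + w_w * state.workload))


# Usage: 30 minutes of work at load 0.8, one update per minute
s = HumanAgentState()
for _ in range(30):
    s = update_state(s, hr_norm=0.6, eda_norm=0.4, task_load=0.8, dt_s=60, working=True)
print(f"fatigue={s.fatigue:.3f}, capacity={effective_capacity(s):.3f}")
```

The saturating form (fatigue grows with `1 - fatigue`) keeps the state bounded, and recovery during rest is proportional to accumulated fatigue; a deployed model would likely need per-operator calibration of these constants.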

A key design choice is to negotiate task allocation at runtime rather than rely purely on centralised optimisation. The human agent can express preferences and constraints in the loop, and the system converges to an executable plan through negotiation and conflict resolution. A predictive digital twin provides a validation layer, simulating task-level and agent-level dynamics to anticipate KPI impacts, task duration drift, and fatigue accumulation before committing to allocations.
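The negotiate-then-validate loop can be sketched in Python as follows, again under loudly stated assumptions: the `Task` fields, the robot's opening proposal (take every high-load task it can execute), the precedence rules for human preferences and refusals, and the use of the closed-form fatigue model `df/dt = k * load * (1 - f)` as a stand-in for the predictive digital twin are all hypothetical. The project's digital twin also anticipates KPI impacts and task duration drift; this stand-in only predicts end-of-plan fatigue, and a plan that exceeds the threshold is revised by moving the heaviest robot-capable human task to the robot, overriding a stated preference if necessary.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    duration_s: float    # nominal execution time
    load: float          # hypothetical ergonomic/cognitive load in [0, 1]
    robot_capable: bool  # whether the robot can execute the task at all


def predict_fatigue(human_tasks, f0=0.0, k=1 / 1800):
    """Digital-twin stand-in: closed-form solution of df/dt = k*load*(1 - f),
    rolled over the human's task sequence."""
    f = f0
    for t in human_tasks:
        f = 1 - (1 - f) * math.exp(-k * t.load * t.duration_s)
    return f


def negotiate(tasks, refuses=(), prefers=(), f0=0.0, f_max=0.6):
    by_name = {t.name: t for t in tasks}
    # 1. Robot proposal: take every high-load task it is capable of
    plan = {t.name: "robot" if t.robot_capable and t.load >= 0.5 else "human"
            for t in tasks}
    # 2. Human counter-proposal: claim preferred tasks, hand refused ones back
    unresolved = []
    for t in tasks:
        if t.name in prefers:
            plan[t.name] = "human"
        elif t.name in refuses:
            if t.robot_capable:
                plan[t.name] = "robot"
            else:
                unresolved.append(t.name)  # cannot be honoured; escalate

    def human_queue():
        return [by_name[n] for n, agent in plan.items() if agent == "human"]

    # 3. Validation: shift the heaviest robot-capable human task to the robot
    #    until predicted end-of-plan fatigue is within the limit
    while predict_fatigue(human_queue(), f0) > f_max:
        movable = [t for t in human_queue() if t.robot_capable]
        if not movable:
            unresolved.append("fatigue limit")  # no safe plan found; escalate
            break
        heaviest = max(movable, key=lambda t: t.load * t.duration_s)
        plan[heaviest.name] = "robot"
    return plan, unresolved


tasks = [
    Task("remove_cover", 120, 0.3, True),
    Task("unscrew_battery", 300, 0.8, True),
    Task("inspect_cells", 600, 0.4, False),
    Task("cut_harness", 900, 0.9, True),
]
# Operator starts partly fatigued (f0=0.3) and prefers to cut the harness
plan, unresolved = negotiate(tasks, refuses={"inspect_cells"},
                             prefers={"cut_harness"}, f0=0.3, f_max=0.5)
# cut_harness goes back to the robot despite the preference;
# inspect_cells stays with the human and is reported as unresolved
print(plan, unresolved)
```

Refusals the robot cannot absorb are returned in `unresolved` rather than silently dropped, mirroring the conflict-resolution step: some conflicts call for escalation instead of automatic reassignment.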