Vision-Enabled SAR for Manual Assembly

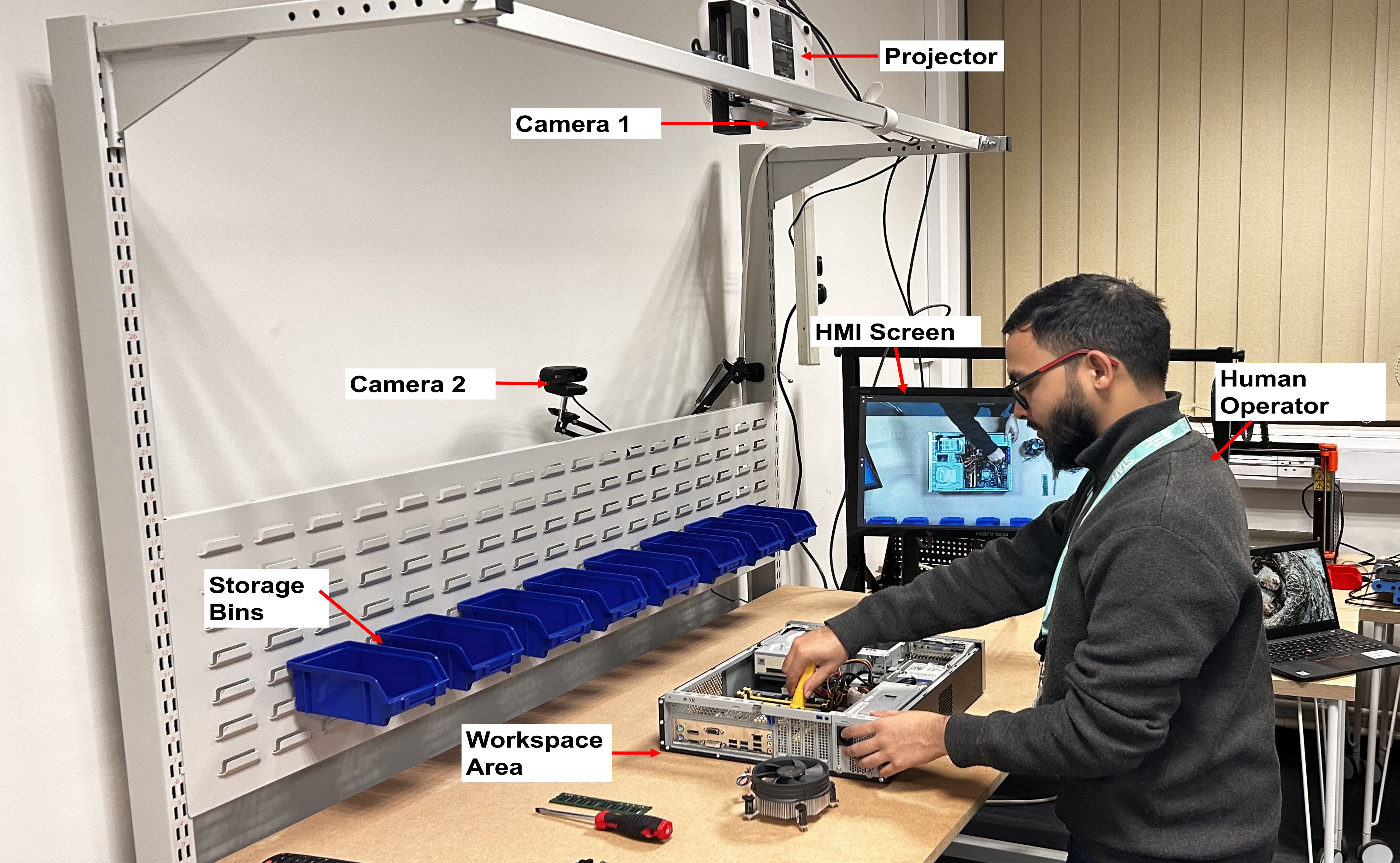

Developing a computer vision-enabled Spatial Augmented Reality framework towards human-centric smart assembly.

Overview

This project develops a human-centric Spatial Augmented Reality (SAR) system that projects adaptive, light-guided assembly instructions directly onto the workspace and is controlled through AI-based hand gesture recognition. The system delivers real-time guidance and error feedback without requiring handheld or wearable devices. User studies show significant reductions in task time, error rates, and perceived workload compared to conventional instruction methods.
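As a rough illustration of how recognized hand gestures could drive the projected instructions, the sketch below models the control loop as a small state machine. It is not the project's implementation: the gesture labels (`NEXT`, `PREVIOUS`, `NONE`), the `InstructionStepper` class, and the step texts are all hypothetical, and the gesture recognizer and projector are assumed to exist outside this snippet.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Gesture(Enum):
    """Hypothetical gesture labels emitted by a hand gesture recognizer."""
    NEXT = auto()
    PREVIOUS = auto()
    NONE = auto()


@dataclass
class InstructionStepper:
    """Tracks which assembly step should currently be projected.

    Assumes `steps` is a non-empty list of instruction texts.
    """
    steps: list[str]
    index: int = 0

    def on_gesture(self, gesture: Gesture) -> str:
        """Update the current step for a recognized gesture and return its text."""
        if gesture is Gesture.NEXT:
            # Clamp at the last step so repeated gestures do not run off the end.
            self.index = min(self.index + 1, len(self.steps) - 1)
        elif gesture is Gesture.PREVIOUS:
            # Clamp at the first step.
            self.index = max(self.index - 1, 0)
        # Gesture.NONE leaves the current step unchanged.
        return self.steps[self.index]


if __name__ == "__main__":
    # Hypothetical step texts, for illustration only.
    stepper = InstructionStepper(["Pick part A", "Place part A", "Fasten part A"])
    for g in (Gesture.NEXT, Gesture.NONE, Gesture.PREVIOUS):
        print(g.name, "->", stepper.on_gesture(g))
```

In a full system, the returned step would presumably select what the projector renders onto the workspace; clamping at both ends keeps an accidental repeated gesture from moving past the first or last instruction.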